Standard Bars

When analyzing financial data, unstructured data sets (in this case, tick data) are commonly transformed into a structured format referred to as bars, where each bar represents a row in a table. MlFinLab implements tick, volume, and dollar bars using traditional standard bar methods, as well as the less common information-driven bars.

For those new to the topic, it is discussed in Chapter 2 of the graduate-level textbook Advances in Financial Machine Learning.

Note

Underlying Literature

The following sources elaborate extensively on the topic:

- Advances in Financial Machine Learning, Chapter 2, by Marcos Lopez de Prado.

The four standard bar methods implemented share the same underlying idea: each takes a sample once a certain threshold is reached, and each results in a time series of Open, High, Low, and Close data.

- Time bars are sampled after a fixed interval of time has passed.
- Tick bars are sampled after a fixed number of ticks have taken place.
- Volume bars are sampled after a fixed number of contracts (volume) have been traded.
- Dollar bars are sampled after a fixed monetary amount has been traded.

These bars are used throughout the textbook (Advances in Financial Machine Learning, Marcos Lopez de Prado, 2018, p. 25) to build the more interesting features for predicting financial time series.
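To make the shared idea concrete, here is a minimal pure-Python sketch of threshold sampling for tick, volume, and dollar bars. It is not MlFinLab's implementation: `Bar` and `sample_bars` are hypothetical names, ticks are assumed to be `(price, volume)` pairs in chronological order, a bar closes once the cumulative statistic reaches the threshold (`>=`), and any leftover ticks after the last bar are simply dropped.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class Bar:
    # Hypothetical bar record, not MlFinLab's output schema
    open: float
    high: float
    low: float
    close: float
    volume: float
    cum_ticks: int
    cum_dollar_value: float


def sample_bars(ticks: Iterable[Tuple[float, float]], metric: str, threshold: float) -> List[Bar]:
    """Hypothetical helper: close a bar once the cumulative metric reaches the threshold.

    ticks: (price, volume) pairs in chronological order.
    metric: "tick", "volume" or "dollar".
    """
    if metric not in ("tick", "volume", "dollar"):
        raise ValueError(f"unknown metric: {metric}")
    bars: List[Bar] = []
    prices: List[float] = []
    volume = dollar = 0.0
    for price, vol in ticks:
        prices.append(price)
        volume += vol
        dollar += price * vol
        # The statistic that drives sampling for the chosen bar type
        cum = {"tick": len(prices), "volume": volume, "dollar": dollar}[metric]
        if cum >= threshold:
            bars.append(Bar(prices[0], max(prices), min(prices), prices[-1], volume, len(prices), dollar))
            # Reset the accumulators for the next bar
            prices, volume, dollar = [], 0.0, 0.0
    # Ticks remaining after the last threshold crossing do not form a bar in this sketch
    return bars


ticks = [(100.0, 5), (101.0, 3), (99.5, 4), (100.5, 10), (102.0, 2)]
for bar in sample_bars(ticks, "volume", 10):
    print(bar)
```

Only the `metric` argument changes between tick, volume, and dollar bars, which mirrors the point above that the three methods differ only in what is being accumulated.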

Tip

A fundamental paper that you need to read to have a better grasp on these concepts is: Easley, David, Marcos M. López de Prado, and Maureen O’Hara. “The volume clock: Insights into the high-frequency paradigm.” The Journal of Portfolio Management 39.1 (2012): 19-29.

Tip

A threshold can be either fixed (given as a float) or dynamic (given as a pd.Series). If a dynamic threshold is used, there is no need to declare a threshold value for every observation. Values are needed only for the first observation (or any time before it) and later at the times when the threshold changes to a new value. Whenever a sample is taken, the most recent threshold level is used.

An example for volume bars: we have daily observations of prices and volumes:

| Time | Price | Volume |
|---|---|---|
| 20.04.2020 | 1000 | 10 |
| 21.04.2020 | 990 | 10 |
| 22.04.2020 | 1000 | 20 |
| 23.04.2020 | 1100 | 10 |
| 24.04.2020 | 1000 | 10 |

And we set a dynamic threshold:

| Time | Threshold |
|---|---|
| 20.04.2020 | 20 |
| 23.04.2020 | 10 |

The data will be sampled as follows:

- 20.04.2020 and 21.04.2020 into one bar, as their combined volume is 20.
- 22.04.2020 as a single bar, as its volume is 20.
- 23.04.2020 as a single bar, as its volume of 10 now meets the lower threshold of 10.
- 24.04.2020 as a single bar again.
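The sampling in this example can be reproduced with a short pandas sketch. `volume_bar_groups` is a hypothetical helper, not part of MlFinLab: it uses `pd.Series.asof` to look up the most recent threshold level at each observation and closes a bar once cumulative volume reaches that level. It returns only the timestamps grouped per bar, rather than full OHLC rows, to keep the focus on the dynamic threshold.

```python
import pandas as pd


def volume_bar_groups(ticks: pd.DataFrame, threshold: pd.Series) -> list:
    """Hypothetical helper: group timestamps into volume bars with a dynamic threshold.

    ticks: DataFrame indexed by timestamp with a 'volume' column.
    threshold: Series indexed by the timestamps at which the threshold changes.
    """
    threshold = threshold.sort_index()
    groups, current, cum_volume = [], [], 0.0
    for timestamp, volume in ticks["volume"].items():
        current.append(timestamp)
        cum_volume += volume
        # Most recent threshold level at or before this observation
        level = threshold.asof(timestamp)
        if cum_volume >= level:
            groups.append(current)
            current, cum_volume = [], 0.0
    return groups


# Data and threshold from the tables above
dates = pd.to_datetime(["2020-04-20", "2020-04-21", "2020-04-22", "2020-04-23", "2020-04-24"])
ticks = pd.DataFrame({"price": [1000, 990, 1000, 1100, 1000], "volume": [10, 10, 20, 10, 10]}, index=dates)
threshold = pd.Series([20, 10], index=pd.to_datetime(["2020-04-20", "2020-04-23"]))

for bar in volume_bar_groups(ticks, threshold):
    print([d.strftime("%d.%m.%Y") for d in bar])
# ['20.04.2020', '21.04.2020']
# ['22.04.2020']
# ['23.04.2020']
# ['24.04.2020']
```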

Time Bars

These are the traditional open, high, low, close bars that traders are used to seeing. The problem with this sampling technique is that information does not arrive at the market on a chronological clock; news events do not occur on the hour, every hour.

For this reason, time bars have poorer statistical properties than the other sampling techniques.
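For comparison, here is a minimal time-bar sketch using pandas `resample`: every bar spans the same clock interval no matter how much trading happened inside it, which is exactly the property criticized above. `time_bars` is a hypothetical helper, not `get_time_bars`; it assumes a DataFrame with `date_time`, `price`, and `volume` columns and only produces OHLC plus volume.

```python
import pandas as pd


def time_bars(ticks: pd.DataFrame, rule: str = "1D") -> pd.DataFrame:
    """Hypothetical helper: OHLC and volume bars on a fixed clock.

    ticks: DataFrame with 'date_time', 'price' and 'volume' columns.
    rule: pandas resampling rule, e.g. "1D".
    """
    indexed = ticks.assign(date_time=pd.to_datetime(ticks["date_time"])).set_index("date_time")
    bars = indexed["price"].resample(rule).ohlc()
    bars["volume"] = indexed["volume"].resample(rule).sum()
    # Intervals without any trades yield NaN prices; drop them
    return bars.dropna(subset=["close"])
```

A quiet day and a news-heavy day each produce exactly one daily bar here, whereas tick, volume, and dollar bars would sample the busy day more often.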

- get_time_bars(file_path_or_df: str | Iterable[str] | DataFrame, resolution: str = 'D', num_units: int = 1, batch_size: int = 20000000, verbose: bool = True, to_csv: bool = False, output_path: str | None = None)

Creates time bars: date_time, open, high, low, close, volume, cum_buy_volume, cum_ticks, cum_dollar_value.

- Parameters:
  - file_path_or_df – (str, iterable of str, or pd.DataFrame) Path to the csv file(s) or pandas DataFrame containing raw tick data in the format [date_time, price, volume].
  - resolution – (str) Resolution type ('D', 'H', 'MIN', 'S').
  - num_units – (int) Number of resolution units (for example, 3 days or 2 hours).
  - batch_size – (int) The number of rows per batch. Use a smaller batch size when less RAM is available.
  - verbose – (bool) Print out batch numbers (True or False).
  - to_csv – (bool) Save bars to csv after every batch run (True or False).
  - output_path – (str) Path to csv file, if to_csv is True.
- Returns:
  (pd.DataFrame) DataFrame of time bars, or None if to_csv=True.

Example

>>> from mlfinlab.data_structures import time_data_structures
>>> # Get processed tick data csv from url
>>> tick_data_url = "https://raw.githubusercontent.com/hudson-and-thames/example-data/main/processed_tick_data.csv"
>>> time_bars = time_data_structures.get_time_bars(
...     tick_data_url, resolution="D", verbose=False
... )
>>> time_bars
tick_num...
date_time...
2023-03-02...

Tick Bars

- get_tick_bars(file_path_or_df: str | Iterable[str] | DataFrame, threshold: float | Series = 70000000, batch_size: int = 20000000, verbose: bool = True, to_csv: bool = False, output_path: str | None = None)

Creates tick bars: date_time, open, high, low, close, volume, cum_buy_volume, cum_ticks, cum_dollar_value.

- Parameters:
  - file_path_or_df – (str/iterable of str/pd.DataFrame) Path to the csv file(s) or pandas DataFrame containing raw tick data in the format [date_time, price, volume].
  - threshold – (float/pd.Series) A cumulative value at or above this threshold triggers a sample to be taken. If a series is given, then at each sampling time the closest previous threshold is used. (Values in the series are only needed at the times when the threshold changes, not for every observation.)
  - batch_size – (int) The number of rows per batch. Use a smaller batch size when less RAM is available.
  - verbose – (bool) Print out batch numbers (True or False).
  - to_csv – (bool) Save bars to csv after every batch run (True or False).
  - output_path – (str) Path to csv file, if to_csv is True.
- Returns:
  (pd.DataFrame) DataFrame of tick bars.

Example

>>> from mlfinlab.data_structures import standard_data_structures
>>> # Get processed tick data csv from url
>>> tick_data_url = "https://raw.githubusercontent.com/hudson-and-thames/example-data/main/processed_tick_data.csv"
>>> tick_bars = standard_data_structures.get_tick_bars(
...     tick_data_url, threshold=5500, batch_size=10000, verbose=False
... )
>>> tick_bars
tick_num...
date_time...
2023-03-02...
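Because a tick bar closes after a fixed number of ticks, it can also be built without an explicit loop: integer-dividing each row's position by the threshold yields a bar id. `tick_bars` below is a hypothetical vectorized sketch, not MlFinLab's batched implementation; it stamps each bar with the time of its last tick (an assumption of this sketch) and drops the trailing partial bar.

```python
import numpy as np
import pandas as pd


def tick_bars(ticks: pd.DataFrame, threshold: int) -> pd.DataFrame:
    """Hypothetical helper: every `threshold` consecutive ticks form one bar.

    ticks: DataFrame with 'date_time', 'price' and 'volume' columns, in time order.
    """
    # Bar id 0 for the first `threshold` ticks, 1 for the next `threshold`, ...
    bar_id = np.arange(len(ticks)) // threshold
    grouped = ticks.groupby(bar_id)
    bars = grouped.agg(
        date_time=("date_time", "last"),  # time of the tick that closes the bar
        open=("price", "first"),
        high=("price", "max"),
        low=("price", "min"),
        close=("price", "last"),
        volume=("volume", "sum"),
    )
    bars["cum_ticks"] = grouped.size()
    # Keep only complete bars; the last group may not have reached the threshold
    return bars[bars["cum_ticks"] == threshold].reset_index(drop=True)
```

The vectorized form works only because the tick count per bar is fixed; volume and dollar bars depend on a running sum that resets at each bar, so they are usually written as a loop.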

Volume Bars

- get_volume_bars(file_path_or_df: str | Iterable[str] | DataFrame, threshold: float | Series = 70000000, batch_size: int = 20000000, verbose: bool = True, to_csv: bool = False, output_path: str | None = None, average: bool = False)

Creates volume bars: date_time, open, high, low, close, volume, cum_buy_volume, cum_ticks, cum_dollar_value and, when average=True, average_volume.

Following the paper "The Volume Clock: Insights into the High-Frequency Paradigm" by Easley, Lopez de Prado, and O'Hara, it is suggested that using 1/50 of the average daily volume as the threshold would result in more desirable statistical properties.

- Parameters:
  - file_path_or_df – (str/iterable of str/pd.DataFrame) Path to the csv file(s) or pandas DataFrame containing raw tick data in the format [date_time, price, volume].
  - threshold – (float/pd.Series) A cumulative value at or above this threshold triggers a sample to be taken. If a series is given, then at each sampling time the closest previous threshold is used. (Values in the series are only needed at the times when the threshold changes, not for every observation.)
  - batch_size – (int) The number of rows per batch. Use a smaller batch size when less RAM is available.
  - verbose – (bool) Print out batch numbers (True or False).
  - to_csv – (bool) Save bars to csv after every batch run (True or False).
  - output_path – (str) Path to csv file, if to_csv is True.
  - average – (bool) If set to True, the average volume traded per bar is added to the output.
- Returns:
  (pd.DataFrame) DataFrame of volume bars.

Example

There is an option to output the average volume of matched trades in each volume bar. To enable it, set the average parameter to True.

>>> from mlfinlab.data_structures import standard_data_structures
>>> # Get processed tick data csv from url
>>> tick_data_url = "https://raw.githubusercontent.com/hudson-and-thames/example-data/main/processed_tick_data.csv"
>>> volume_bars = standard_data_structures.get_volume_bars(
...     tick_data_url, threshold=28000, batch_size=1000000, verbose=False
... )
>>> volume_bars
tick_num...
date_time...
2023-03-02...
>>> # Volume bars with average volume per bar
>>> volume_bars_average = standard_data_structures.get_volume_bars(
...     tick_data_url, threshold=28000, batch_size=1000000, verbose=False, average=True
... )
>>> volume_bars_average
tick_num...average_volume
date_time...
2023-03-02...

Dollar Bars

Tip

- Dollar bars are the most stable of the four types.
- It is suggested that using 1/50 of the average daily dollar value as the threshold would result in more desirable statistical properties.
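The 1/50 rule of thumb can be turned into a concrete threshold from historical ticks. The hypothetical helper below (not an MlFinLab function) sums price * volume per calendar day, averages the daily totals, and divides by 50; days without any trades are simply absent from the average. It assumes the same `date_time`, `price`, `volume` columns as the MlFinLab input format.

```python
import pandas as pd


def suggested_dollar_threshold(ticks: pd.DataFrame, bars_per_day: int = 50) -> float:
    """Hypothetical helper: average daily dollar value divided by bars_per_day.

    ticks: DataFrame with 'date_time', 'price' and 'volume' columns.
    """
    dollar_value = ticks["price"] * ticks["volume"]
    # Calendar day of each tick (time of day set to midnight)
    day = pd.to_datetime(ticks["date_time"]).dt.normalize()
    daily_value = dollar_value.groupby(day).sum()
    return float(daily_value.mean() / bars_per_day)
```

The resulting float could then be passed as `threshold` to `get_dollar_bars`; dropping the price factor gives the analogous volume-bar threshold based on average daily volume.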

- get_dollar_bars(file_path_or_df: str | Iterable[str] | DataFrame, threshold: float | Series = 70000000, batch_size: int = 20000000, verbose: bool = True, to_csv: bool = False, output_path: str | None = None)

Creates dollar bars: date_time, open, high, low, close, volume, cum_buy_volume, cum_ticks, cum_dollar_value.

Following the paper "The Volume Clock: Insights into the High-Frequency Paradigm" by Easley, Lopez de Prado, and O'Hara, it is suggested that using 1/50 of the average daily dollar value as the threshold would result in more desirable statistical properties.

- Parameters:
  - file_path_or_df – (str/iterable of str/pd.DataFrame) Path to the csv file(s) or pandas DataFrame containing raw tick data in the format [date_time, price, volume].
  - threshold – (float/pd.Series) A cumulative value at or above this threshold triggers a sample to be taken. If a series is given, then at each sampling time the closest previous threshold is used. (Values in the series are only needed at the times when the threshold changes, not for every observation.)
  - batch_size – (int) The number of rows per batch. Use a smaller batch size when less RAM is available.
  - verbose – (bool) Print out batch numbers (True or False).
  - to_csv – (bool) Save bars to csv after every batch run (True or False).
  - output_path – (str) Path to csv file, if to_csv is True.
- Returns:
  (pd.DataFrame) DataFrame of dollar bars.

Example

>>> from mlfinlab.data_structures import standard_data_structures
>>> # Get processed tick data csv from url
>>> tick_data_url = "https://raw.githubusercontent.com/hudson-and-thames/example-data/main/processed_tick_data.csv"
>>> dollar_bars = standard_data_structures.get_dollar_bars(
...     tick_data_url, threshold=10000000, batch_size=10000, verbose=False
... )
>>> dollar_bars
tick_num...
date_time...
2023-03-02...

Statistical Properties

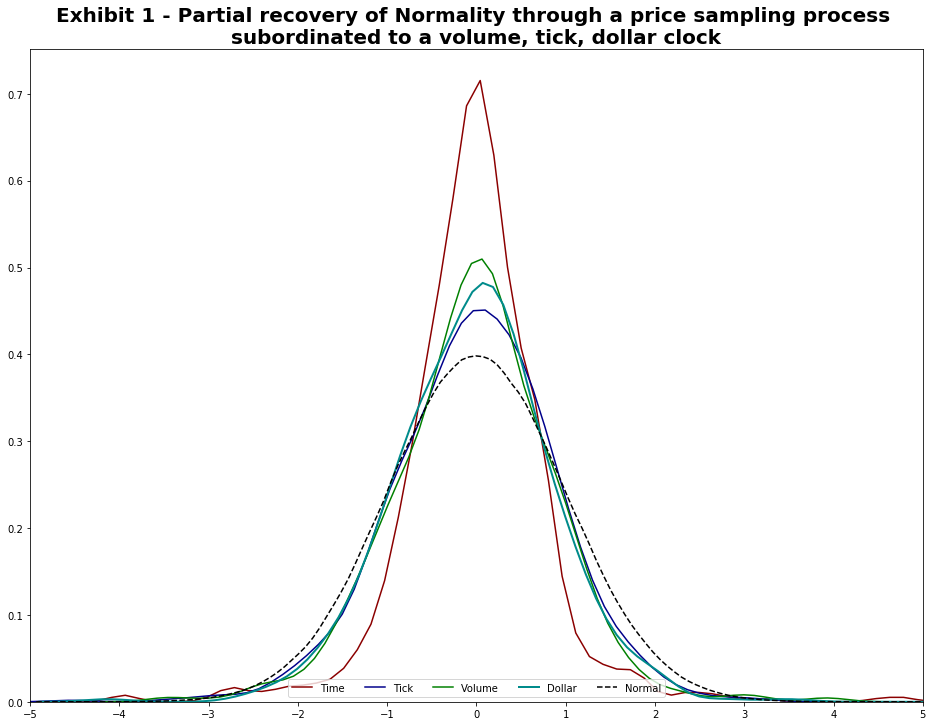

The chart below shows that tick, volume, and dollar bars all exhibit a distribution significantly closer to normal - versus standard time bars:

This matches up with the results of the original paper “The volume clock (2012)”.
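One way to put a number on "closer to normal" is the Jarque-Bera statistic, JB = n/6 (S^2 + (K - 3)^2 / 4), where S is the sample skewness and K the sample kurtosis; lower values indicate a more normal-looking sample. This is our choice of diagnostic, not necessarily the method behind the chart above, and `jarque_bera` and `bar_log_returns` are hypothetical helpers written in plain NumPy.

```python
import numpy as np


def jarque_bera(returns) -> float:
    """Hypothetical helper: Jarque-Bera statistic n/6 * (S^2 + (K - 3)^2 / 4)."""
    r = np.asarray(returns, dtype=float)
    n = r.size
    centered = r - r.mean()
    var = np.mean(centered ** 2)
    skew = np.mean(centered ** 3) / var ** 1.5  # sample skewness S
    kurt = np.mean(centered ** 4) / var ** 2    # sample kurtosis K (3 for a normal)
    return float(n / 6.0 * (skew ** 2 + (kurt - 3.0) ** 2 / 4.0))


def bar_log_returns(close) -> np.ndarray:
    """Hypothetical helper: log returns between consecutive bar closes."""
    return np.diff(np.log(np.asarray(close, dtype=float)))
```

For example, `jarque_bera(bar_log_returns(time_bars["close"]))` can be compared with the same computation on the tick, volume, or dollar bars; no particular values are implied here, and the comparison is only meaningful over a reasonably long sample.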

Research Notebooks

The following research notebooks can be used to better understand the previously discussed data structures.

- Getting Started
- ETF Trick Hedge
- Futures Roll Trick

Research Articles

Presentation Slides