Entropy Measures

In the context of information science, entropy is a measure of the uncertainty or randomness in a set of information: it quantifies the information, or surprise, contained in a message or data source. By assessing the level of uncertainty or disorder within the data, entropy tells us how rich or predictable the information is, and therefore how organized or random a given information system is. Markets are imperfect, which means prices are formed with partial information. As a result, entropy measures help determine how much useful information is contained in price signals.

This module defines a number of entropy measures that are helpful in determining the amount of information contained in a price signal. They are:

Shannon Entropy

Plug-in (or Maximum Likelihood) Entropy

Lempel-Ziv Entropy

Kontoyiannis Entropy

Note

Underlying Literature

The following sources elaborate extensively on the topic:

- Advances in Financial Machine Learning, Chapter 18, by Marcos Lopez de Prado. Describes the utility and motivation behind entropy measures in finance in more detail.

Note

Range of Entropy Values

Users may wonder why some of the entropy functions can yield values greater than 1. The following StackExchange thread may offer a useful explanation:

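Essentially, the entropy measures are calculated with base-2 logarithms, so they are expressed in bits. For an alphabet \(A\), the Shannon entropy is bounded above by \(\log _{2}[\|A\|]\), which exceeds 1 whenever the message uses more than two distinct symbols (for example, after quantile or sigma encoding).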
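For instance, with four equally likely symbols (a hypothetical alphabet chosen only for illustration), the base-2 Shannon entropy reaches 2 bits:

```latex
H[X] = -\sum_{x \in A} p[x] \log_{2} p[x]
     = -4 \cdot \frac{1}{4} \log_{2} \frac{1}{4}
     = \log_{2} 4 = 2 \text{ bits} > 1
```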

The Shannon Entropy

Claude Shannon is credited with providing one of the first conceptualizations of entropy in information theory, in 1948. Shannon defined entropy as the average amount of information produced by a stationary source of data. More strictly, entropy is the smallest number of bits per character required to describe a message in a uniquely decodable way.

Formally, the Shannon entropy of a discrete random variable \(X\) with possible values \(x \in A\) is defined as:

\[ H[X] \equiv -\sum_{x \in A} p[x] \log _{2} p[x] \]

with \(0 \leq H[X] \leq \log _{2}[\|A\|]\), where:

- \(p[x]\) is the probability of \(x\).
- \(\|A\|\) is the size of set \(A\).

We can interpret \(H[X]\) as the probability-weighted average of the informational content in \(A\), where the information of each outcome is measured in bits as \(\log _{2} \frac{1}{p[x]}\). This makes intuitive sense: observing outcomes that occur rarely (i.e. have a low probability) provides more information than observing high-probability outcomes. Said differently, we learn more when something unexpected happens.
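The definition can be illustrated with a minimal pure-Python sketch. It is not the mlfinlab implementation: the function name `shannon_entropy` is hypothetical, and \(p[x]\) is assumed to be estimated by the relative frequency of each symbol in the message.

```python
from collections import Counter
import math


def shannon_entropy(message: str) -> float:
    """Shannon entropy in bits, with p[x] estimated by symbol frequencies."""
    counts = Counter(message)
    n = len(message)
    # Probability-weighted average of the information log2(1 / p[x])
    return sum((c / n) * math.log2(n / c) for c in counts.values())


print(shannon_entropy("11110000"))  # 1.0: two equally likely symbols
print(shannon_entropy("11111110"))  # about 0.54: mostly 1s, little surprise
print(shannon_entropy("abcdabcd"))  # 2.0: four equally likely symbols, log2(4)
```

The four-symbol message attains the upper bound \(\log _{2}[\|A\|]\), while the skewed binary message falls well below 1 bit.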

Implementation

get_shannon_entropy(message: str | Series) -> float

Function returns the Shannon entropy of an encoded message (encoding should consist exclusively of 1s and 0s).

Parameters:

- message – (str or pd.Series) Encoded message.

Returns:

- (float) Shannon entropy.

Example

>>> import pandas as pd
>>> from mlfinlab.microstructural_features import first_generation, entropy
>>> # Read in the tick data, storing only the closing price
>>> url = "https://raw.githubusercontent.com/hudson-and-thames/example-data/main/tick_bars.csv"
>>> tick_prices = pd.read_csv(url)["close"]
>>> # Generate trade classifications for our tick data
>>> tick_classifications = first_generation.tick_rule(prices=tick_prices)
>>> # Calculate the Shannon entropy of the tick classifications
>>> entropy.get_shannon_entropy(message=tick_classifications)
0.9...

Plug-in (Maximum Likelihood) Entropy

Gao et al. (2008) expanded on Shannon’s work by conceptualizing the Plug-in measure of entropy, also known as the maximum likelihood estimator of entropy.

Given a data sequence \(x_{1}^{n}\), consisting of a string of values starting in position 1 and ending in position \(n\), we can form a dictionary \(A^w\) of all words of length \(w < n\) in that sequence. Then, we can consider some arbitrary word \(y_{1}^w \in A^w\) of length \(w\). We denote by \(\hat{p}_{w}\left[y_{1}^{w}\right]\) the empirical probability of the word \(y_{1}^w\) in \(x_{1}^n\), that is, the relative frequency with which \(y_{1}^w\) appears in \(x_{1}^n\).

If the data are generated by a stationary and ergodic process, the law of large numbers guarantees that, for a fixed \(w\) and a large \(n\), the empirical distribution \(\hat{p}_{w}\) will be close to the true distribution \(p_{w}\). Under these circumstances, a natural estimator for the entropy rate (i.e. average entropy per bit) is:

\[ \hat{H}_{n, w} = -\frac{1}{w} \sum_{y_{1}^{w} \in A^{w}} \hat{p}_{w}\left[y_{1}^{w}\right] \log _{2} \hat{p}_{w}\left[y_{1}^{w}\right] \]

Since the empirical distribution is also the maximum likelihood estimate of the true distribution, this is also often referred to as the maximum likelihood entropy estimator. The value \(w\) should be large enough for \(\hat{H}_{n, w}\) to be acceptably close to the true entropy \(H\). The value of \(n\) needs to be much larger than \(w\), so that the empirical distribution of order \(w\) is close to the true distribution.
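The plug-in estimator \(\hat{H}_{n,w} = -\frac{1}{w}\sum \hat{p}_{w} \log_2 \hat{p}_{w}\) can be sketched in a few lines of Python. This is a minimal sketch rather than the mlfinlab code: the function name `plug_in_entropy` is hypothetical, and words are assumed to be read from overlapping windows of the message.

```python
from collections import Counter
import math


def plug_in_entropy(message: str, w: int = 1) -> float:
    """Plug-in (maximum likelihood) entropy rate in bits per symbol."""
    if not 0 < w < len(message):
        raise ValueError("word length must satisfy 0 < w < len(message)")
    # Empirical distribution p_hat_w over overlapping words of length w
    words = [message[i:i + w] for i in range(len(message) - w + 1)]
    counts = Counter(words)
    total = len(words)
    # H_hat_{n,w} = -(1/w) * sum over words y of p_hat_w[y] * log2(p_hat_w[y])
    entropy = -sum((c / total) * math.log2(c / total) for c in counts.values())
    return entropy / w


msg = "01" * 8  # perfectly alternating message of length 16
print(plug_in_entropy(msg, w=1))  # 1.0: single symbols look random
print(plug_in_entropy(msg, w=2))  # about 0.5: only "01" and "10" occur
```

With \(w = 1\) the alternating message looks maximally random; larger \(w\) exposes its structure and pushes the estimate toward zero, which illustrates why \(w\) must be large enough for \(\hat{H}_{n,w}\) to approach the true entropy.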

Implementation

get_plug_in_entropy(message: str | Series, word_length: int = 1) -> float

Function returns the Plug-in entropy of an encoded message (encoding should consist exclusively of 1s and 0s).

A key input to the algorithm is the word_length parameter, documented below as the average word length in the encoded message; it plays the role of \(w\) in the estimator above. For our purposes it is left at its default of 1, since each trade classification is represented by a single digit.

Parameters:

- message – (str or pd.Series) Encoded message.
- word_length – (int) Average word length.

Returns:

- (float) Plug-in entropy.

Example

>>> import pandas as pd
>>> from mlfinlab.microstructural_features import first_generation, entropy
>>> # Read in the tick data, storing only the closing price
>>> url = "https://raw.githubusercontent.com/hudson-and-thames/example-data/main/tick_bars.csv"
>>> tick_prices = pd.read_csv(url)["close"]
>>> # Generate trade classifications for our tick data
>>> tick_classifications = first_generation.tick_rule(prices=tick_prices)
>>> # Calculate the Plug-in entropy of the tick classifications
>>> entropy.get_plug_in_entropy(message=tick_classifications)
0.9...

The Lempel-Ziv Complexity

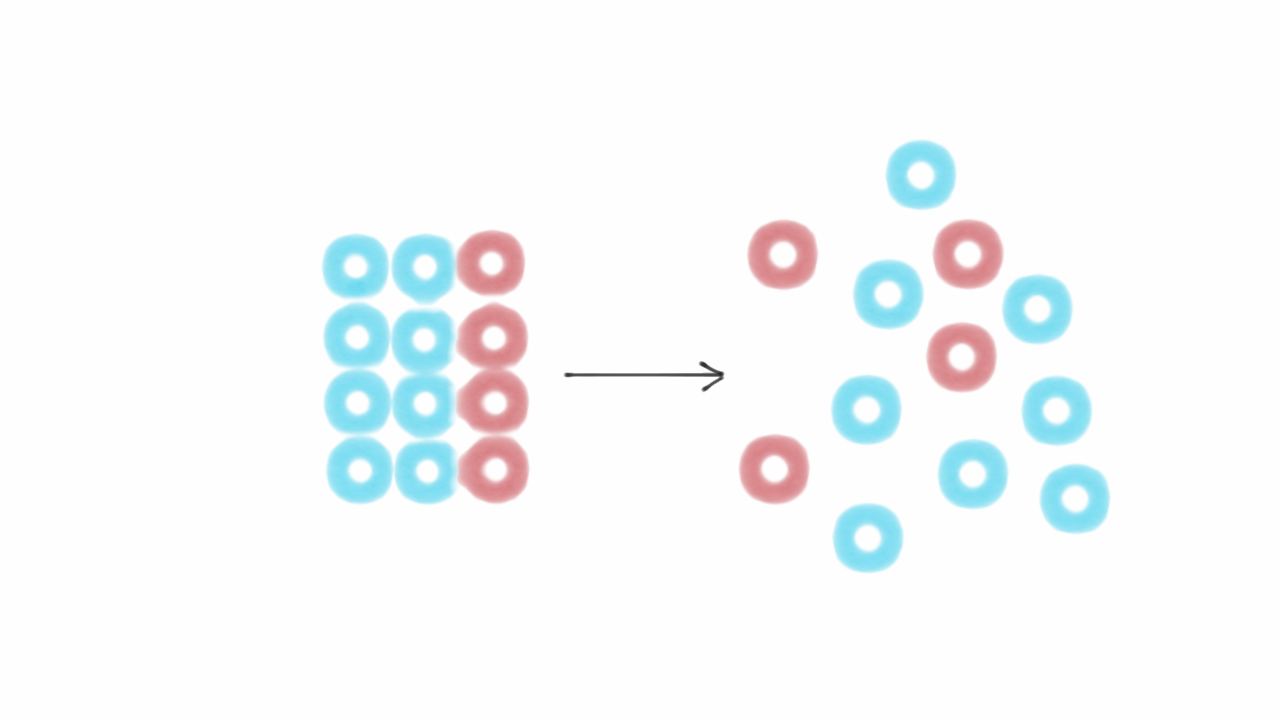

In 1978, Abraham Lempel and Jacob Ziv proposed treating information as a measure of complexity. Intuitively this makes sense: a complex sequence contains more information than a regular (predictable) sequence. Based on this idea, the Lempel-Ziv (LZ) algorithm decomposes a message into a number of non-redundant substrings, an approach very different from how we calculate Shannon entropy. LZ complexity builds on this decomposition by dividing the number of non-redundant substrings by the length of the original message. The intuition is that complex messages have high entropy and therefore require large dictionaries of substrings relative to the length of the original message.
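The decomposition can be sketched as an LZ78-style incremental parse, in which each new phrase is the shortest substring, starting at the current position, that is not yet in the dictionary. This is a minimal sketch under that assumption; the function name `lempel_ziv_complexity` is hypothetical and the result is not guaranteed to match `get_lempel_ziv_entropy` exactly.

```python
def lempel_ziv_complexity(message: str) -> float:
    """Number of distinct LZ phrases in ``message`` divided by its length."""
    if not message:
        raise ValueError("message must be non-empty")
    phrases = set()
    i, n = 0, len(message)
    while i < n:
        j = i + 1
        # Grow the substring starting at i until it is one not seen before
        while j <= n and message[i:j] in phrases:
            j += 1
        # If the message ends first, the last (repeated) phrase adds nothing new
        phrases.add(message[i:j])
        i = j
    return len(phrases) / n


print(lempel_ziv_complexity("0" * 16))            # 0.3125 (5 phrases)
print(lempel_ziv_complexity("0110100110010110"))  # 0.4375 (7 phrases)
```

The constant message needs ever longer phrases and so produces a smaller dictionary than the more irregular message of the same length.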

For a detailed explanation of the Lempel-Ziv algorithm, you can view the following resources:

Implementation

get_lempel_ziv_entropy(message: str | Series) -> float

Function returns the Lempel-Ziv entropy of an encoded message (encoding should consist exclusively of 1s and 0s).

Parameters:

- message – (str or pd.Series) Encoded message.

Returns:

- (float) Lempel-Ziv entropy.

Example

>>> import pandas as pd
>>> from mlfinlab.microstructural_features import first_generation, entropy
>>> # Read in the tick data, storing only the closing price
>>> url = "https://raw.githubusercontent.com/hudson-and-thames/example-data/main/tick_bars.csv"
>>> tick_prices = pd.read_csv(url)["close"]
>>> # Generate trade classifications for our tick data
>>> tick_classifications = first_generation.tick_rule(prices=tick_prices)
>>> # Calculate the Lempel-Ziv entropy of the tick classifications
>>> entropy.get_lempel_ziv_entropy(message=tick_classifications)
0.0...

The Kontoyiannis Entropy

In 1998, Kontoyiannis attempted to make more efficient use of the information available in a message by taking advantage of a technique known as length matching.

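Let us define \(L_{i}^n\) as 1 plus the length of the longest match found in the \(n\) bits prior to \(i\):

\[ L_{i}^{n} = 1 + \max \left\{ l \mid x_{i}^{i+l-1} = x_{j}^{j+l-1} \text{ for some } i-n \leq j \leq i-1,\ l \in [0, n] \right\} \]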

Now, also consider that, in 1993, Ornstein and Weiss formally proved that:

\[ \lim _{n \rightarrow \infty} \frac{L_{i}^{n}}{\log _{2}[n]} = \frac{1}{H} \]

where \(H\) is the measure of entropy. Kontoyiannis uses this result to estimate Shannon’s entropy rate by calculating the average \(\frac{L_{i}^{n}}{\log _{2}[n]}\) and using the reciprocal to estimate \(H\). Intuitively, as we increase the available history, we expect that messages with high entropy will produce relatively shorter non-redundant substrings. In contrast, messages with low entropy will produce relatively longer non-redundant substrings.
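The fixed-window case can be sketched in pure Python; the expanding-window option is omitted. This is a minimal sketch, not the mlfinlab implementation: the function names are hypothetical, matches are allowed to overlap position \(i\) (an assumption), and it follows the description above by averaging \(L_i^n / \log_2[n]\) over positions and taking the reciprocal. Other variants instead average \(\log_2[n] / L_i^n\) directly.

```python
import math
import random


def match_length(message: str, i: int, n: int) -> int:
    """L_i^n: 1 plus the length of the longest substring starting at i that
    also starts at some j with i - n <= j <= i - 1 (overlap with i allowed)."""
    longest = 0
    for length in range(1, n + 1):
        target = message[i:i + length]
        if len(target) < length:  # ran past the end of the message
            break
        if any(message[j:j + length] == target for j in range(i - n, i)):
            longest = length
        else:
            break  # a longer match would contain a match of this length
    return longest + 1


def kontoyiannis_entropy(message: str, window: int) -> float:
    """Fixed-window estimate of H: reciprocal of the average L_i^n / log2(n)."""
    n = window
    positions = range(n, len(message) - n + 1)  # full look-back and look-ahead
    if n < 2 or len(positions) == 0:
        raise ValueError("need window >= 2 and len(message) >= 2 * window")
    total = sum(match_length(message, i, n) / math.log2(n) for i in positions)
    return len(positions) / total


rng = random.Random(42)
noisy = "".join(rng.choice("01") for _ in range(400))
print(kontoyiannis_entropy("01" * 200, window=16))  # about 0.235 (= 1 / 4.25)
print(kontoyiannis_entropy(noisy, window=16))       # noticeably higher
```

On the periodic message every match runs to the full window, so the estimate stays low; on pseudo-random bits the matches are short and the estimate rises, in line with the intuition above.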

Implementation

get_konto_entropy(message: str | Series, window_size: int | None = None) -> float

Function returns the Kontoyiannis entropy of an encoded message (encoding should consist exclusively of 1s and 0s).

A fixed window size can be specified for the calculation of the Kontoyiannis entropy. If no window size is specified, an expanding window is used.

Parameters:

- message – (str or pd.Series) Encoded message.
- window_size – (int or None) Window length to be used; None selects an expanding window.

Returns:

- (float) Kontoyiannis entropy.

Example

>>> import pandas as pd
>>> from mlfinlab.microstructural_features import first_generation, entropy
>>> # Read in the tick data, storing only the closing price
>>> url = "https://raw.githubusercontent.com/hudson-and-thames/example-data/main/tick_bars.csv"
>>> tick_prices = pd.read_csv(url)["close"]
>>> # Generate trade classifications for our tick data
>>> tick_classifications = first_generation.tick_rule(prices=tick_prices)
>>> # Calculate the Kontoyiannis entropy of a subset of the tick classifications
>>> entropy.get_konto_entropy(message=tick_classifications.iloc[:100])
0.86...

Message Encoding

Entropy measures the average amount of information produced by a source of data. In financial machine learning, we can calculate entropy for tick sizes, tick rule series, and percent changes between ticks. Estimating entropy requires first encoding the data as a message. We can apply binary encoding (usually applied to tick rule series), quantile encoding, or sigma encoding. Quantile and sigma encoding first build a dictionary that maps values to symbols (quantile_mapping or sigma_mapping) and then use an additional function, encode_array, to encode the data into a message with that dictionary. This message is then passed to the functions that calculate the entropy measures.

Binary Encoding

In the case of a series of returns derived from price bars (i.e., bars containing prices fluctuating between two symmetric horizontal barriers, centered around the start price), binary encoding arises naturally because the value of \(|r_{t}|\) is roughly constant. For example, a stream of returns \(r_{t}\) can be encoded according to its sign, with 1 indicating \(r_{t} > 0\) and 0 indicating \(r_{t} < 0\), while observations where \(r_{t} = 0\) are removed.
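Sign-based binary encoding of a return stream can be sketched as follows. The function name `binary_encode` is hypothetical and is not `encode_tick_rule_array`; it simply applies the rule described above to a list of returns.

```python
def binary_encode(returns) -> str:
    """Encode returns by sign: '1' if r > 0, '0' if r < 0; r == 0 is dropped."""
    return "".join("1" if r > 0 else "0" for r in returns if r != 0)


print(binary_encode([0.002, -0.001, 0.0, 0.003, -0.002]))  # 1010
```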

Implementation

encode_tick_rule_array(tick_rule_array: list) -> str

Encode an array of tick signs (-1, 1, 0).

Parameters:

- tick_rule_array – (list) Tick rules.

Returns:

- (str) Encoded message.

Example

>>> import pandas as pd
>>> import numpy as np
>>> from mlfinlab.microstructural_features.encoding import encode_tick_rule_array
>>> from mlfinlab.microstructural_features import first_generation, entropy
>>> url = "https://raw.githubusercontent.com/hudson-and-thames/example-data/main/tick_bars.csv"
>>> tick_prices = pd.read_csv(url)["close"]
>>> # Generate trade classifications for our tick data
>>> tick_classifications = first_generation.tick_rule(prices=tick_prices)
>>> values = tick_classifications.values
>>> message = encode_tick_rule_array(values)
>>> # Calculate the plug-in entropy as an example
>>> plug_in_entropy_binary_message = entropy.get_plug_in_entropy(message=message)
>>> plug_in_entropy_binary_message
0.99...

Quantile Encoding

Unless price bars are employed, more than two codes are likely to be required. One way of dealing with this is to assign a code to each \(r_{t}\) based on the quantile to which it belongs. We use the in-sample (training) period to calculate the quantile limits. With this approach, some codes span a larger portion of the range of \(r_{t}\) than others. Since the data is divided into quantiles, we expect a uniform (in-sample) or nearly uniform (out-of-sample) code distribution, which tends to increase our entropy estimates on average.
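Quantile encoding with in-sample limits can be sketched with NumPy. This is a minimal sketch, not `quantile_mapping`/`encode_array`: the function name `quantile_encode` is hypothetical, codes are assumed to be lowercase letters (so at most 26 codes), and out-of-sample values beyond the in-sample range are assumed to fall into the first or last code.

```python
import string

import numpy as np


def quantile_encode(train, values, num_codes: int = 4) -> str:
    """Encode ``values`` with letters given by in-sample (``train``) quantile bins."""
    if not 1 <= num_codes <= 26:
        raise ValueError("num_codes must be between 1 and 26")
    # Interior quantile limits from the training period only,
    # e.g. the 25th, 50th and 75th percentiles when num_codes = 4
    limits = np.quantile(train, np.linspace(0, 1, num_codes + 1)[1:-1])
    # Code index = number of limits <= value; extremes land in the end bins
    codes = np.searchsorted(limits, values, side="right")
    return "".join(string.ascii_lowercase[int(c)] for c in codes)


train = np.arange(1, 9)  # toy in-sample values
print(quantile_encode(train, train))             # aabbccdd: uniform in-sample
print(quantile_encode(train, [0.0, 10.0, 4.0]))  # adb
```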

Implementation

quantile_mapping(array: list, num_letters: int = 26) -> dict

Generate a dictionary of quantile-letters based on the values from array and the dictionary length (num_letters).

Parameters:

- array – (list) Values to split on quantiles.
- num_letters – (int) Number of letters (quantiles) to encode.

Returns:

- (dict) Dict of quantile-symbol.

encode_array(array: list, encoding_dict: dict) -> str

Encode an array as a string using the encoding dict. In case of multiple occurrences of the minimum values, the indices corresponding to the first occurrence are returned.

Parameters:

- array – (list) Values to encode.
- encoding_dict – (dict) Dict of quantile-symbol.

Returns:

- (str) Encoded message.

Example

>>> import pandas as pd
>>> import numpy as np
>>> from mlfinlab.microstructural_features.encoding import quantile_mapping, encode_array
>>> from mlfinlab.microstructural_features import first_generation, entropy
>>> url = 'https://raw.githubusercontent.com/hudson-and-thames/example-data/main/tick_bars.csv'
>>> tick_prices = pd.read_csv(url)['close']
>>> # Generate trade classifications for our tick data
>>> tick_classifications = first_generation.tick_rule(prices=tick_prices)
>>> values = tick_classifications.values
>>> quantile_dict = quantile_mapping(values, num_letters=10)
>>> message = encode_array(values, quantile_dict)
>>> # Calculate the plug-in entropy as an example
>>> plug_in_entropy_quantile_message = entropy.get_plug_in_entropy(message=message)
>>> plug_in_entropy_quantile_message
0.99...

Sigma Encoding

Rather than limiting the number of codes, we can instead allow the price stream to define the vocabulary. Suppose we fix a discretization step \(\sigma\). Then we assign 0 to \(r_{t} \in [\min \{r\}, \min \{r\}+\sigma)\), 1 to \(r_{t} \in [\min \{r\}+\sigma, \min \{r\}+2 \sigma)\), and so on (i.e. we “slice” our range of returns into equally wide bins) until every observation has been encoded, using a total of \(\operatorname{ceil}\left[\frac{\max \{r\}-\min \{r\}}{\sigma}\right]\) codes.

Unlike quantile encoding, each code now covers the same fraction of the range of \(r_{t}\)’s. Entropy estimates will be smaller than in quantile encoding on average because codes are not uniformly distributed; however, the introduction of a “rare” code will cause entropy measurements to substantially increase.
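The equal-width slicing can be sketched with NumPy. This is a minimal sketch, not `sigma_mapping`: the function name `sigma_encode` is hypothetical, codes are returned as integers, and the maximum return is assumed to be folded into the last bin when it lands exactly on an upper edge.

```python
import math

import numpy as np


def sigma_encode(returns, sigma: float):
    """Equal-width encoding: code k covers [min r + k*sigma, min r + (k+1)*sigma)."""
    r = np.asarray(returns, dtype=float)
    lo, hi = r.min(), r.max()
    # Total number of codes: ceil[(max r - min r) / sigma], at least one
    num_codes = max(math.ceil((hi - lo) / sigma), 1)
    codes = np.floor((r - lo) / sigma).astype(int)
    # When (max - min) / sigma is a whole number, max r sits on the upper edge
    # of the last bin; fold it into that bin so codes stay in 0..num_codes-1
    codes = np.minimum(codes, num_codes - 1)
    return codes.tolist(), num_codes


codes, num_codes = sigma_encode([-1.0, -0.25, 0.0, 0.4, 1.0], sigma=0.5)
print(codes, num_codes)  # [0, 1, 2, 2, 3] 4
```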

Implementation

sigma_mapping(array: list, step: float = 0.01) -> dict

Generate a dictionary of sigma-encoded letters based on the values from array and the discretization step.

Parameters:

- array – (list) Values to discretize into bins of width step.
- step – (float) Discretization step (sigma).

Returns:

- (dict) Dict of value-symbol.

Example

>>> import pandas as pd
>>> import numpy as np
>>> from mlfinlab.microstructural_features.encoding import sigma_mapping, encode_array
>>> from mlfinlab.microstructural_features import first_generation, entropy
>>> url = "https://raw.githubusercontent.com/hudson-and-thames/example-data/main/tick_bars.csv"
>>> tick_prices = pd.read_csv(url)["close"]
>>> # Generate trade classifications for our tick data
>>> tick_classifications = first_generation.tick_rule(prices=tick_prices)
>>> values = tick_classifications.values
>>> sigma_dict = sigma_mapping(values, step=1)
>>> message = encode_array(values, sigma_dict)
>>> # Calculate the plug-in entropy as an example
>>> plug_in_entropy_sigma_message = entropy.get_plug_in_entropy(message=message)
>>> plug_in_entropy_sigma_message
0.99...

Research Notebook

The following research notebook can be used to better understand the entropy measures covered in this module.