History Weighted Regression

Intuitively, when we look at history data to make a reasonable guess of the current situation, we tend to at first identify similar cases to our current situation. For example, say we are trying to predict a stock price with some companies’ fundamental data (could be more than 1 company). Then amongst those similar cases, we tend to take a deeper look at those that are farther away from the historical mean, because they usually bear more interesting information, and the average cases are plenty and they could just fluctuate due to noise.

Note

This documentation is based on the following three papers written by Megan Szasonis, Mark Kritzman and David Turkington.

We mostly implement by choosing the consensus used in [Relevance] to be more consistent in notations.

Introduction and Ideas

The history weighed regression method proposed by Megan Szasonis, Mark Kritzman and David Turkington, has the following three key features, as written in [Addition by Subtraction]:

-

The prediction from a linear regression equation is mathematically equivalent to a weighted average of the past values of the dependent variable, in which the weights are the relevance of the independent observations.

-

Relevance within this context is defined as the sum of statistical similarity and informativeness, both of which are defined as Mahalanobis distances.

-

Together, these features allow researchers to censor less relevant observations and derive more reliable predictions of the dependent variable.

Simply speaking, this is a method that selects a subsample based on how relevant each history instance in the training set is to our test instance, and run prediction on the subsample (i.e., a given percentage of all events ranked by relevance). Moreover, when one chooses to use run prediction over all events (i.e., 100% of all events ranked by relevance), this method’s result coincide with OLS.

Definitions and Concepts

Note

To keep the notations consistent in this document, we assume all vectors are column vectors (the common way in linear algebra textbooks, though not in machine learning books), and an n-by-k matrix storing data has n instances and k features (the usual way people implement in pandas.).

Mahalanobis Distances

Suppose we have an N-by-k data matrix \(X\), with \(N\) many instances and \(k\) features. Think about \(X\) as our training set. Then we can calculate the features covariance matrix (k-by-k) as

Assuming the \(\Omega\) is symmetric positive definite (i.e., no 0 variances), then we can calculate the Fisher information matrix \(\Omega^{-1}\) (though strictly speaking it is only true asymptotically in the frequentist sense, but we borrow the name here anyway).

The Mahalanobis Distance of two instances \(x_i, x_j\) are defined as follows in the quadratic form:

because \(\Omega^{-1}\) is also symmetric and positive definite.

Intuitively, because \(\Omega\) records the variances among our data for each feature around the mean, \(\Omega^{-1}\) records how tight the training data are for each feature around the mean. Therefore, \(x^T \Omega^{-1} x\) is the amount of information along the direction \(x\): The larger the value, the larger the amount of information. Hence \(d_M(x_i, x_j)\) quantifies the amount of information given the training data between two specific instances \((x_i, x_j)\), and the two instances are arbitrary as they do not have to all come from training set or test set, though we often assume \(x_i\) to be a past instance and \(x_j\) to be a test instance for interpretability.

Similarity

Now we define the similarity between two instances \((x_i, x_j)\) as the negative of their Mahalanobis distance:

Trivially, when \(x_i = x_j\) then they are the most similar pair and the similarity will become 0, the largest value. This quantity measures how similar two instances are, based on our training set. The larger the value, the more similar they are, and as a result the less information one can get.

Informativeness

Remember that \(x^T \Omega^{-1} x\) is the amount of information along the direction \(x\) away from the mean, we can therefore define the informativeness as below:

where \(\bar{x}\) is the mean of our training data \(X\) for each feature. The larger the value, the less similar \(x_i\) is to the mean, and therefore the more information it brings.

Note

Similarity and informativeness are addressing different aspects of the data. They are in some sense the opposite of each other but with some nuances here: similarity is used to measure between two instances whereas informativeness is used to measure between one instance to the training data. We will combine the two together to form the key value for the history weighted regression method called relevance.

Relevance and Subsample

Now we can define the relevance value between two instances \((x_i, x_t)\) as follows:

Relevance is interpreted as the sum of similarity and informativeness for \((x_i, x_t)\), where \(x_i\) is in the training set, and \(x_t\) is a test instance. Intuitively, when the in-sample instance and the test instance pair \((x_i, x_t)\) are similar and give great information, their relevance value will be greater.

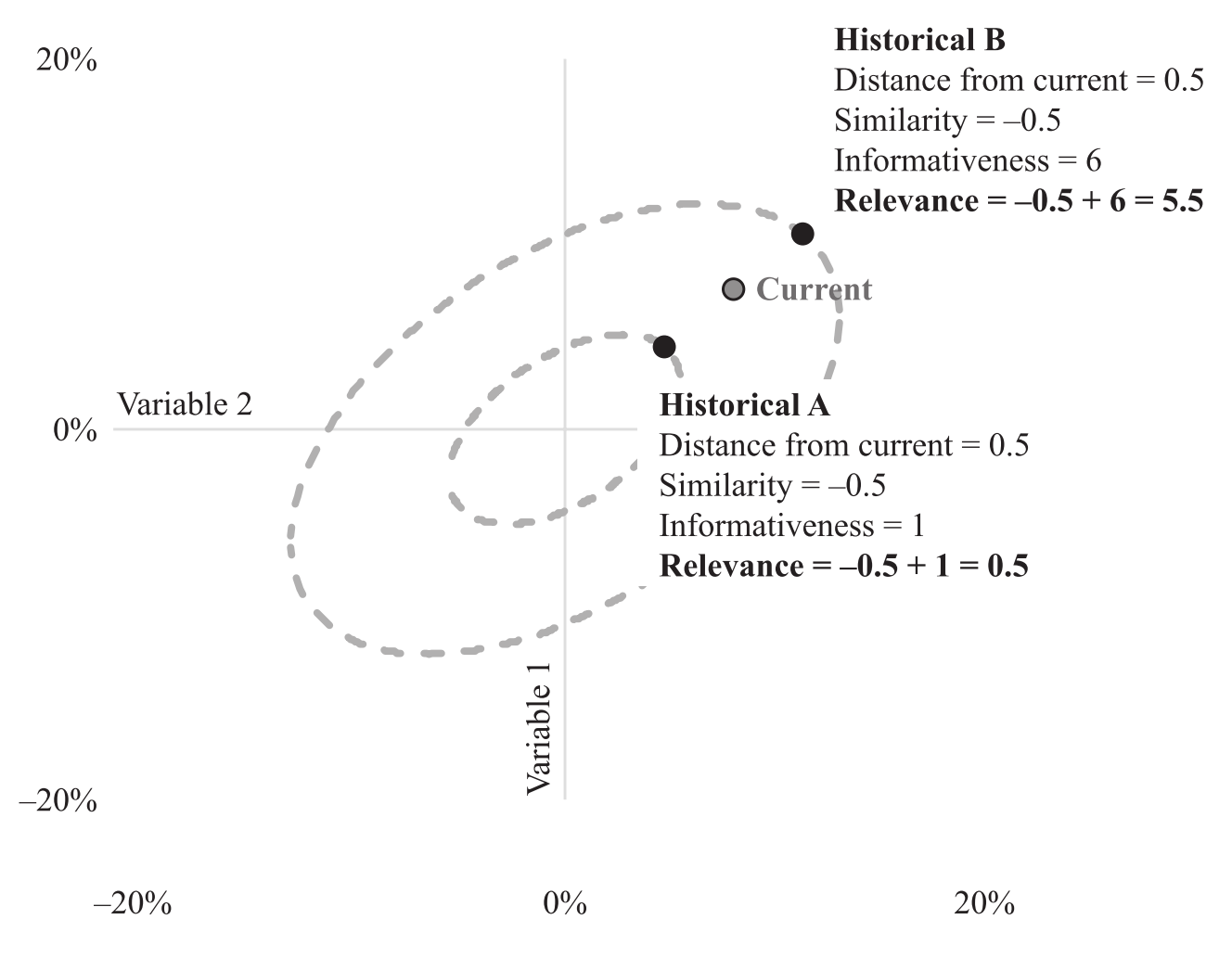

Indeed, relevance is one of the values to quantify the thought process we had in the beginning for guessing the stock’s price using companies fundamental data. The figure below gives a good explanation on relevance. Note that the two history events are on their elliptical orbits of fixed amounts of informativeness.

An illustration of the relationship between similarity, informativeness and relevance. We choose the historical event B to be more relevant to our current case than event A. (Figure from [Addition by Subtraction])

Prediction

Now we have a instance in independent variable \(x_t\), we aim to make a prediction \(\hat{y}_t\) based on \(x_t\). Say the training data for independent variable is in the matrix N-by-K \(X\) and the dependent variable is in the N-by-1 vector \(Y\).

Workflow

-

Calculate all relevance between \(x_t\) and each row of \(X\): \(r_{it}\) where \(i = 1,\cdots, N\).

-

Rank all the instances in relevance and pick the instances in top \(q \in (0, 1]\) quantiles to form a subsample with \(n\) instances.

-

Make prediction using the formula

\[\hat{y}_t = \bar{y} + \frac{1}{n-1} \sum_{i=1}^n r_{it} (y_i - \bar{y})\]where \(\bar{y}\) is the subsample average of the dependent variable.

We can read the formula above in two parts: First, when no information is given about \(X\), the best prediction we can formulate for the unknown \(y_t\) is \(\bar{y}\). The second part can be interpreted as a weighted average by relevance of historical deviations for the dependent variable, and the weights are computed by \(x_i, x_t, i=1, \cdots, n\).

Tip

It is a good practice to rescale \(X\) for each feature, for example, by the number of standard deviations from its mean. This will in general produce a covariance matrix with smaller condition numbers, and thus for the Fisher information matrix since their condition numbers are equal. A smaller condition number is more stable numerically in the calculation, especially when we initially calculate the inverse of the covariance matrix. Because every step for prediction is linear, the final prediction will not change, and do not need to re-scale.

Connection with OLS

We aim to show that, when we do not reduce to a subsample (i.e., \(q=1\) or equivalently \(n=N\)), then the above prediction is identical with prediction given by OLS. First, without loss of generality assume \(X\) and \(Y\) have means of 0. Then plug

into the prediction formula, we get

Where \(X_{sub}\) and \(Y_{sub}\) are the associated subsamples selected by relevance with \(x_t\). Since we assume \(q=1\), equivalently \(n=N\), we have

which is the formula for OLS.

Summary and Comments

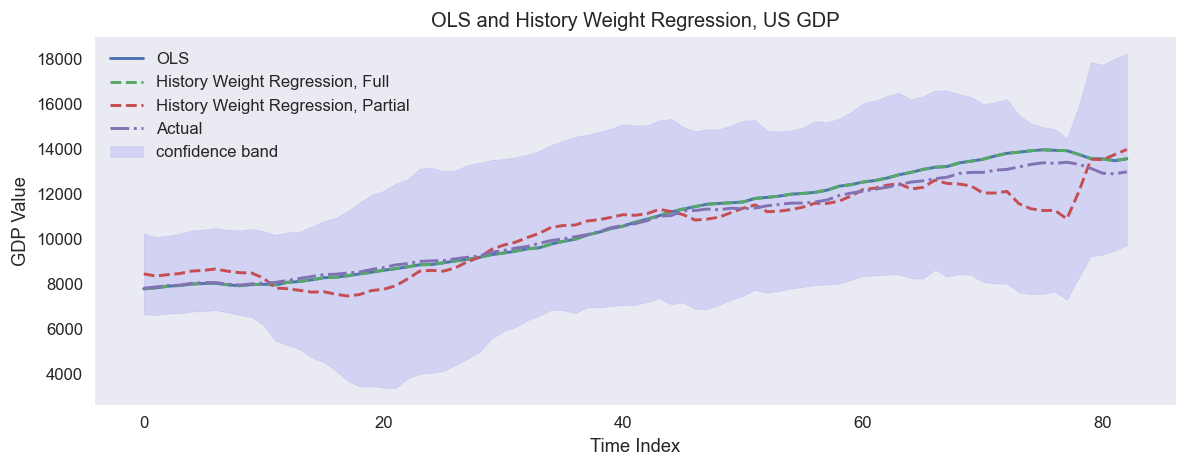

An example of predicting US quarterly GDP in 1988-2009 using quarterly fundamental data from 1959-1987: real personal consumption expenditures, real federal consumption expenditures & gross investment, unemployment rate and M1 (seasonally adjusted). The full sample regression collides with OLS.

Most of the summaries and comments below are taken directly from the three articles, though may be worded slightly differently.

-

The prediction from a linear regression equation is mathematically equivalent to a weighted average of past values of the dependent variable in which the weights are the relevance of the independent variables.

-

This equivalence allows one to form a relevance-weighted prediction of the dependent variable by using only a subsample of relevant observations. This approach is called partial sample regression.

-

Like partial sample regression, an event study separates relevant observations from non-relevant observations, but it does so by identification rather than mathematically.

-

We should also note that our approach is different from weighted least squares regression, which uses fixed weights regardless of the data point being predicted and applies the weights to calculate the covariance matrix among predictors.

-

This regression method is different from performing separate regressions on subsamples of the most relevant observations; in a separate regression approach, the covariance matrix used for estimation would also be based on the subsample, whereas we always use the full-sample covariance matrix.

-

The calculation is invariant under linear re-scale of \(X\). Rescale to get a better condition number for \(\Omega^{-1}\).

-

This method is conceptually different from PCA, where feature importance is considered. This regression method considers importance in instances, not features. However, one can combine the PCA transformation and the partial sample regression, and if all principal components are considered, the prediction result will be identical compared to directly working with \(X\).

Implementation

- class HistoryWeightRegression(Y_train: array, X_train: array, check_condi_num: bool = False)

-

The class that houses all related methods for the historically weighted regression tool.

- __init__(Y_train: array, X_train: array, check_condi_num: bool = False)

-

Instantiate the class with data.

- Parameters:

-

-

Y_train – (np.array) The 1D (n, ) dependent data vector.

-

X_train – (np.array) The 2D (n-by-k) independent data vector, n: num of instances, k: num of variables or features.

-

check_condi_num – (bool) Optional. Whether to check the condition number of the covariance matrix and fisher info matrix from the training data X (Their values are the same). If this number is too large then it may lead to numerical issues. Defaults to False. Toggle this off to save some computing time.

-

- __weakref__

-

list of weak references to the object (if defined)

- calc_info(x_i: array, fisher_info_mtx: array | None = None) float

-

Calculate the informativeness of x_i: info(x_i)

info(x_i) := 1/2 * (x_i - x_avg).T @ fisher_info @ (x_i - x_avg) Here x_avg is the training data average for each column.

- Parameters:

-

-

x_i – (np.array) 1D (k, ) dependent data vector for an instance where k is the number of features.

-

fisher_info_mtx – (np.array) Optional. 2D (k, k) matrix for the whole training data. Defaults to the fisher info matrix stored in the class calculated using training data.

-

- Returns:

-

(float) The informativeness value.

- calc_relevance(x_i: array, x_j: array, fisher_info_mtx: array | None = None) float

-

Calculate relevance of x_i and x_j: r(x_i, x_j).

r(x_i, x_j) := sim(x_i, x_j) + info(x_i) + info(x_j)

- Parameters:

-

-

x_i – (np.array) 1D (k, ) dependent data vector for an instance where k is the number of features.

-

x_j – (np.array) 1D (k, ) dependent data vector for an instance where k is the number of features.

-

fisher_info_mtx – (np.array) Optional. 2D (k, k) matrix for the whole training data. Defaults to the fisher info matrix stored in the class calculated using training data.

-

- Returns:

-

(float) The relevance value.

- calc_sim(x_i: array, x_j: array, fisher_info_mtx: array | None = None) float

-

Calculate the similarity of x_i and x_j: sim(x_i, x_j)

sim(x_i, x_j) := -1/2 * (x_i - x_j).T @ fisher_info @ (x_i - x_j)

- Parameters:

-

-

x_i – (np.array) 1D (k, ) dependent data vector for an instance where k is the number of features.

-

x_j – (np.array) 1D (k, ) dependent data vector for an instance where k is the number of features.

-

fisher_info_mtx – (np.array) Optional. 2D (k, k) matrix for the whole training data. Defaults to the fisher info matrix stored in the class calculated using training data.

-

- Returns:

-

(float) The value of similarity.

- find_subsample(x_t: array, relev_ratio_threshold: float = 1, above: bool = True) Tuple[array, array, array, float]

-

Find the subsamples of X and Y in the training set by relevance above or below a given threshold with x_t.

For example, if relev_ratio_threshold=0.3, above=True, then it finds the top 30 percentile. If relev_ratio_threshold=0.3, above=False, then it finds the bottom 70 percentile.

The standard deviation is calculated as the sqrt of the variance of y_t hat, the prediction w.r.t. x_t: var_yt_hat = [(n-1)/n^2 * var_y] + [1/n * y_mean^2] + [var_y/n + y_mean^2/(n-1)]*var_r, where var_y is the subsample variance of Y, y_mean is the subsample average of Y, var_r is the subsample variance of relevance.

- Parameters:

-

-

x_t – (np.array) A single row element test data, 1D (k, 1). k is the number of features.

-

relev_ratio_threshold – (float) Optional. The subsample ratio to use for predicting values ranked by relevance, must be a number between [0, 1].

-

above – (bool) Optional. Whether to find the subsample above the threshold or below the threshold.

-

- Returns:

-

(Tuple[np.array, np.array, np.array, float]) The subsample for X, for Y, the corresponding indices to select the subsample and the std.

- get_fit_result() dict

-

Fit result and statistics using the training data.

- Returns:

-

(dict) The fit result and associated statistics.

- predict(X_t: array, relev_ratio_threshold: float = 1) array

-

Predict the result using fitted model from a subsample chosen by the ratio of relevance.

For example, if relev_ratio_threshold = 0.4, then it chooses the top 40 percentile data ranked by relevance to x_t. This method returns the prediction in column 0, also returns the associated prediction standard deviations in the column 1.

For each row element x_t in X_t we have the following: y_t := y_avg + 1/(n-1) * sum{relevance(x_i, x_t) * (y_i - y_avg), subsample} where y_i, x_i are from subsamples. The matrix form is: y_t := y_avg + 1/(n-1) * (x_t - x_avg).T @ fisher_info_mtx @ (X_sub - x_avg).T @ (y_sub - y_avg)

- Parameters:

-

-

X_t – (np.array) The 2D (n_t-by-k) test data, n_t is the number of instances, k is the number of variables or features.

-

relev_ratio_threshold – (float) Optional. The subsample ratio to use for predicting values ranked by relevance, must be a number between [0, 1]. For example, 0.6 corresponds to the top 60 percentile data ranked by relevance to x_t. Defaults to 1.

-

- Returns:

-

(np.array) The predicted results in col 0, and standard deviations in col 1.

- predict_one_val(x_t: array, relev_ratio_threshold: float = 1) Tuple[float, float]

-

Predict one value using fitted model from a subsample chosen by the ratio of relevance.

For example, if relev_ratio_threshold = 0.4, then it chooses the top 40 percentile data ranked by relevance to x_t. This method also returns the associated prediction standard deviations.

y_t := y_avg_sub + 1/(n-1) * sum{relevance(x_i, x_t) * (y_i - y_avg_sub), subsample} where y_i, x_i are from subsamples. The equivalent matrix form is: y_t := y_avg_sub + 1/(n-1) * (x_t - x_avg).T @ fisher_info_mtx @ (X_sub - x_avg).T @ (y_sub - y_avg_sub)

- Parameters:

-

-

x_t – (np.array) A single row element test data, 1D (k, 1). k is the number of features.

-

relev_ratio_threshold – (float) Optional. The subsample ratio to use for predicting values ranked by relevance, must be a number between [0, 1]. For example, 0.6 corresponds to the top 60 percentile data ranked by relevance to x_t. Defaults to 1.

-

- Returns:

-

(Tuple[float, float]) The predicted result and associated standard deviation.

Example

# Import libraries

from mlfinlab.regression.history_weight_regression import HistoryWeightRegression

from sklearn import preprocessing

import pandas as pd

import numpy as np

import statsmodels.api as sm

# Get data: US macroeconomic data

# Only scale X according to the training data, keep Y the same

data = sm.datasets.macrodata.load_pandas().data

X = data[['realcons', 'realgovt', 'unemp', 'm1']].to_numpy()

Y = data['realgdp'].to_numpy()

# Training data

training_len = 120

X_train = X[:training_len]

Y_train = Y[:training_len]

# Scale the training data in X

scaler_X = preprocessing.StandardScaler()

scaler_X.fit(X_train)

X_train_scale = scaler_X.transform(X_train) # Transform X training data

# Test Data, scale the test data in X

X_t_scale = scaler_X.transform(X[training_len:]) # Transform X test data

Y_t = Y[training_len:]

# Instantiate the regression class with training data

hwr = HistoryWeightRegression(Y_train=Y_train, X_train=X_train_scale)

# Check the matrices and condition numbers

print(hwr.get_fit_result())

# Predict one value, top 0.7 quantile relevance

x_t = X_t_scale[2]

prediction, std = hwr.predict_one_val(x_t=x_t, relev_ratio_threshold=0.7)

# Predict a bunch of values, top 0.7 quantile relevance

# Col0 is the prediction, col1 is the prediction standard deviation

predictions = hwr.predict(X_t=X_t_scale, relev_ratio_threshold=0.7)

Research Notebooks

The following research notebook can be used to better understand the History Weighted Regression.